Lung cancer

Lung cancer is the leading cause of cancer-related death worldwide and the second most commonly diagnosed cancer after breast cancer (Sung et al., 2021). Smoking remains the main risk factor for the disease: about three quarters of patients are current or former smokers (Siegel et al., 2021). Other risk factors include exposure to second-hand smoke, environmental pollutants, radon, and occupational hazards such as asbestos (Malhotra et al., 2016).

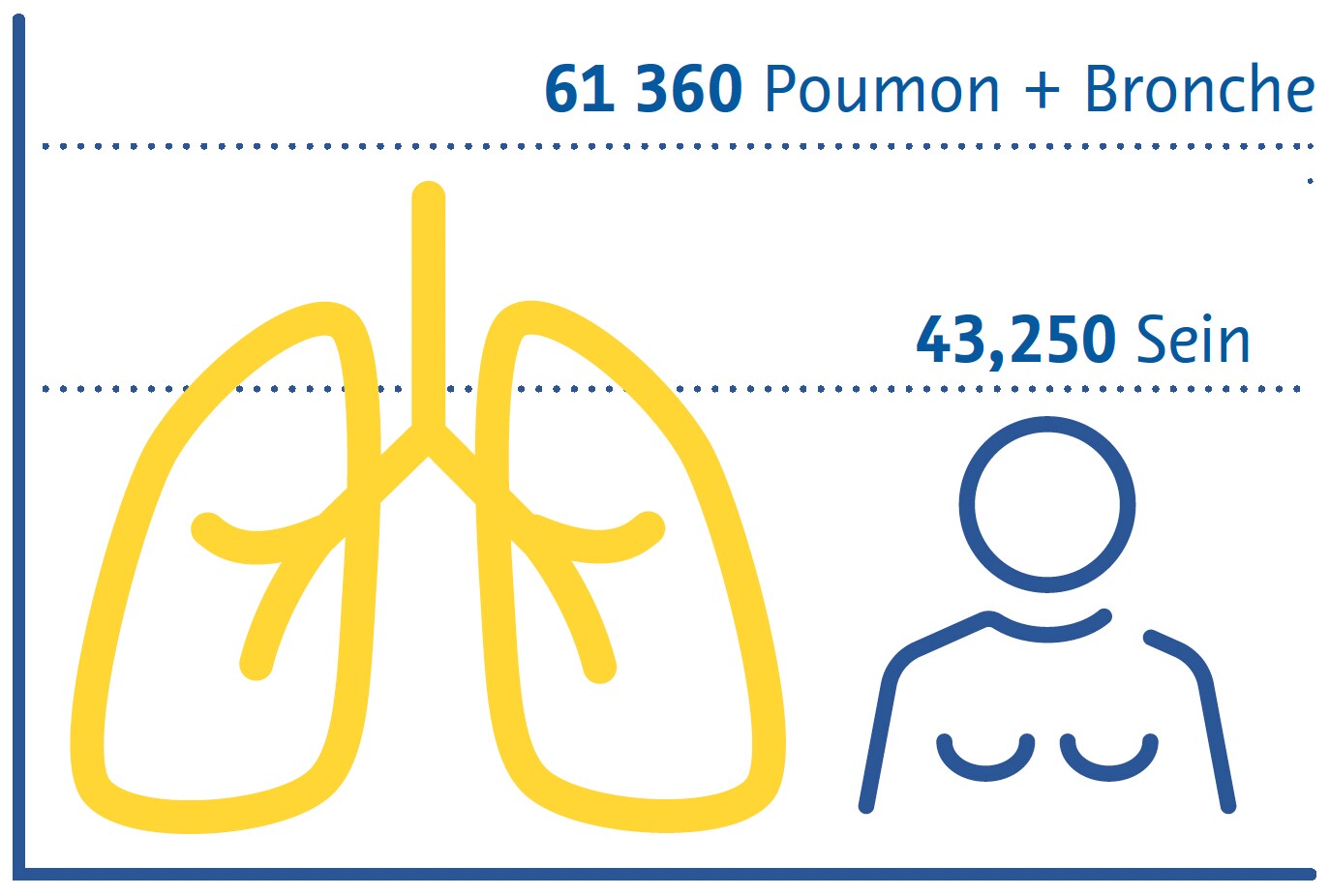

Figure: Estimated cancer deaths in the United States in 2022 (panel: women).

There are two main subtypes of lung cancer: non-small cell lung cancer (NSCLC) and small cell lung cancer (SCLC) (Thai et al., 2021). NSCLC is the more common, accounting for about 85% of all cases, and includes adenocarcinoma, squamous cell carcinoma, and large cell carcinoma (Thai et al., 2021). SCLC is the more aggressive form, often associated with rapid tumor growth and early metastasis (Thai et al., 2021).

The clinical features of lung cancer are often non-specific in the early stages, which contributes to delayed diagnosis (Hamilton et al., 2005). Common symptoms include persistent cough, chest pain, shortness of breath, and coughing up blood (Hamilton et al., 2005). Diagnosis involves imaging studies and tissue biopsy (open, bronchoscopic, or image-guided), which is essential to confirm the diagnosis and the histological subtype and to guide treatment decisions (Detterbeck et al., 2013).

Treatment of lung cancer depends on the stage at diagnosis and on the histological subtype (Detterbeck et al., 2013). Surgery, radiotherapy, and chemotherapy are the main treatment modalities (Detterbeck et al., 2013; Thai et al., 2021). Early-stage NSCLC can be treated by surgical resection of the tumor, whereas advanced cases often require a combination of chemotherapy and radiotherapy (Detterbeck et al., 2013; Thai et al., 2021). Because of its aggressive nature, SCLC is often treated with chemotherapy, sometimes combined with radiotherapy (Detterbeck et al., 2013; Thai et al., 2021).

Immunotherapy has emerged as a promising option for the treatment of lung cancer, particularly NSCLC (Thai et al., 2021; C. Wang et al., 2021). Drugs that target immune checkpoints have been shown to improve survival in some patients (Thai et al., 2021; C. Wang et al., 2021).

Targeted therapies directed at specific genetic mutations or alterations have also been developed for subsets of patients with lung cancer, offering more personalized and more effective treatment options (Thai et al., 2021). A multidisciplinary approach involving oncologists, surgeons, radiologists, and other healthcare professionals is essential to tailor treatment plans to the needs of individual patients (Detterbeck et al., 2013).

Screening strategies

Early clinical trials of lung cancer screening used sputum analysis and conventional chest radiography and found no association between screening and reduced mortality (Marcus et al., 2000). Later trials showed that low-dose computed tomography (CT) screening, thanks to its superior ability to detect non-calcified nodules that may represent early-stage cancer (Henschke et al., 1999), reduces lung cancer mortality by 20% (National Lung Screening Trial Research Team et al., 2011; Aberle et al., 2011) to 24% (de Koning et al., 2020). Although low-dose CT is more expensive and delivers a higher radiation dose than conventional radiography, the risks associated with radiation exposure are very low (Sands et al., 2021), and large studies have shown that such screening strategies are cost-effective (Black et al., 2014; Toumazis et al., 2021).

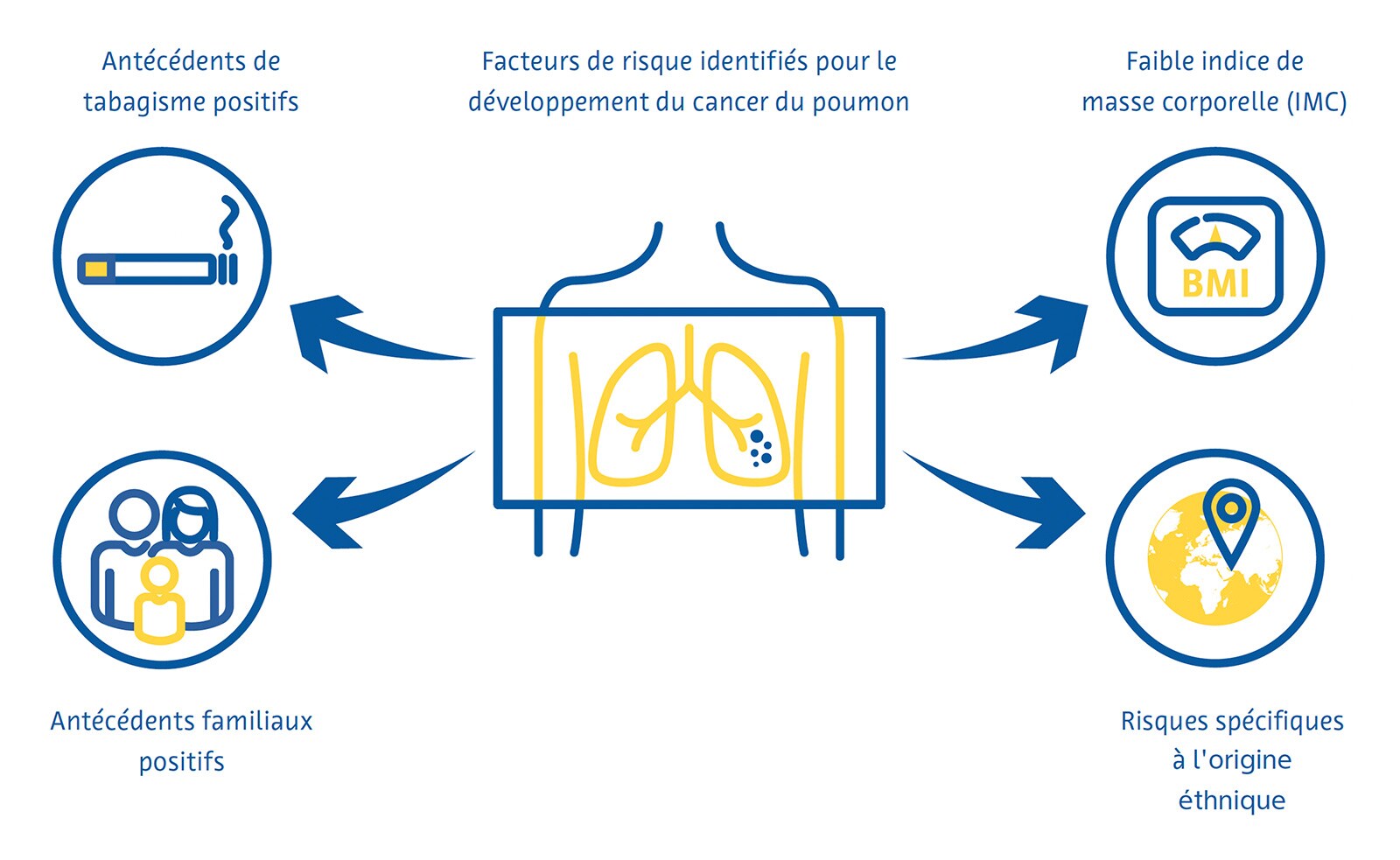

Lung cancer screening is most effective when it targets people at high risk of developing the disease. Current national screening guidelines in many countries identify these people largely through criteria derived from the early lung cancer screening trials, notably age and smoking history in pack-years. Researchers in other countries have developed mathematical models that estimate lung cancer risk to determine screening eligibility by incorporating additional variables such as ethnicity, personal and family history of lung cancer, and body mass index (Cassidy et al., 2008; Field et al., 2016; Tammemägi et al., 2013).

Screening challenges

Despite strong evidence that lung cancer screening is effective, its practical implementation faces several challenges. Uptake of and adherence to lung cancer screening programs are low, with a participation rate of only 7 to 14% in the United States (J. Li et al., 2018; Zahnd and Eberth, 2019) and low rates in the United Kingdom, particularly among those at highest risk of lung cancer (Ali et al., 2015). As more countries set up national lung cancer screening programs, the already substantial global shortage of radiologists (AAMC Report Reinforces Mounting Physician Shortage, 2021; Smieliauskas et al., 2014; The Royal College of Radiologists, 2022) is likely to complicate screening further, because fewer qualified radiologists will be available to interpret a growing number of CT examinations.

This is particularly problematic because reporting lung cancer screening examinations requires specific expertise and training (LCS Project, n.d.). In addition, a higher workload is generally associated with more errors by radiologists (Hanna et al., 2018), which can lead to poorer screening outcomes. There is also no consensus on how to manage incidental findings identified during lung cancer screening. These findings lead to further diagnostic work-up in up to 15% of screened patients (Morgan et al., 2017) and are associated with increased patient anxiety and costs to the healthcare system (Adams et al., 2016).

Role of artificial intelligence

Identifying high-risk individuals

In the United States, the eligibility criteria for lung cancer screening issued by the Centers for Medicare and Medicaid Services (CMS) miss more than half of lung cancer cases (Y. Wang et al., 2015). These criteria, which include only smoking history and age, provide suboptimal risk prediction because they ignore other important risk factors (Burzic et al., 2022) and are often based on inaccurate or unavailable data (Kinsinger et al., 2017). This matters all the more because about a quarter of all lung cancer cases are not attributed to smoking (Sun et al., 2007).
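To make the limitation of criteria based only on age and smoking history concrete, the following minimal sketch computes pack-years (packs smoked per day multiplied by years smoked) and applies a simple threshold rule. The class, function, and parameter names are hypothetical, and the threshold values in the usage lines are illustrative assumptions rather than figures quoted in this article; the applicable guideline should be consulted for current values.

```python
from dataclasses import dataclass


@dataclass
class SmokingHistory:
    age: int
    packs_per_day: float
    years_smoked: float
    years_since_quit: float  # 0 for current smokers


def pack_years(history: SmokingHistory) -> float:
    """Pack-years = packs smoked per day x number of years smoked."""
    return history.packs_per_day * history.years_smoked


def is_eligible(history: SmokingHistory, min_age: int, max_age: int,
                min_pack_years: float, max_years_since_quit: float) -> bool:
    """Threshold rule using only age and smoking history (no other risk factors)."""
    return (
        min_age <= history.age <= max_age
        and pack_years(history) >= min_pack_years
        and history.years_since_quit <= max_years_since_quit
    )


# Illustrative thresholds only (assumed values, not taken from the article).
THRESHOLDS = dict(min_age=55, max_age=77, min_pack_years=30, max_years_since_quit=15)

heavy_smoker = SmokingHistory(age=60, packs_per_day=1.0, years_smoked=35, years_since_quit=0)
never_smoker = SmokingHistory(age=60, packs_per_day=0.0, years_smoked=0, years_since_quit=0)

print(is_eligible(heavy_smoker, **THRESHOLDS))  # True
print(is_eligible(never_smoker, **THRESHOLDS))  # False
```

Under a rule of this kind, a person who has never smoked is excluded whatever their other risk factors, which is the gap that risk models with additional variables aim to close.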

Studies have therefore investigated the use of artificial intelligence (AI) to improve lung cancer risk prediction and to include more people in screening programs. Combining information from electronic medical records and conventional chest radiographs using a convolutional neural network (CNN) proved more effective than existing screening criteria at predicting lung cancer over a 12-year period and was associated with a 31% reduction in missed lung cancer cases (Lu et al., 2020). Another study using a similar approach found that patients classified as high risk when conventional chest radiographs were used together with the CMS criteria, but not when the CMS criteria were used alone, had a 6-year lung cancer incidence of 3.3% (Raghu et al., 2022). This is well above the 1.3% 6-year risk threshold for lung cancer screening, which is similar to the threshold used by the USPSTF (U.S. Preventive Services Task Force) (Wood et al., 2018).

Reducing radiation dose and improving image quality

Deep learning techniques can be used for image denoising, and some solutions for chest CT are commercially available (Nam et al., 2021). Deep learning-based image reconstruction of ultra-low-dose CT increased the nodule detection rate and improved the accuracy of nodule measurement compared with conventional reconstruction algorithms (Jiang et al., 2022). Deep learning-based denoising of ultra-low-dose CT also yielded better subjective image quality than ultra-low-dose CT without denoising (Hata et al., 2020).

Pulmonary nodule detection

Pulmonary nodules are defined as small (generally less than 3 centimeters), rounded or irregular masses in the lung tissue that may be infectious, inflammatory, congenital, or neoplastic (Wyker & Henderson, 2022). Detection of pulmonary nodules by radiologists is time-consuming and prone to errors such as missing or misidentifying potentially malignant nodules (Al Mohammad et al., 2019; Armato et al., 2009; Gierada et al., 2017; Leader et al., 2005).

Small pulmonary nodules are often invisible on conventional chest radiographs, and even large nodules can go unnoticed (Austin et al., 1992). Despite this, the potential of AI to detect pulmonary nodules and cancers on chest radiographs is an active area of research (Cha et al., 2019; Homayounieh et al., 2021; Jones et al., 2021; X. Li et al., 2020; Mendoza & Pedrini, 2020; Nam et al., 2019; Yoo et al., 2021), because chest radiography is the first-line imaging examination for respiratory and cardiac disease, is widely available, and is inexpensive (Ravin & Chotas, 1997).

A meta-analysis of nine studies found an area under the receiver operating characteristic curve (AUC) of 0.884 for AI-based detection of pulmonary nodules on conventional chest radiographs (Aggarwal et al., 2021).

A meta-analysis of 41 studies found sensitivities ranging from 55 to 99% for traditional machine learning and from 80 to 97% for deep learning algorithms identifying pulmonary nodules on low-dose CT (Pehrson et al., 2019). One of the first studies to use deep learning to detect pulmonary nodules on low-dose CT reported a sensitivity of 98.3% at one false positive per scan using a combination of different algorithms (Setio et al., 2017). False positives tend to be blood vessels, scar tissue, or portions of the chest wall, vertebrae, or mediastinal tissue (Cui et al., 2022; L. Li et al., 2019; Setio et al., 2017).

A meta-analysis of 56 studies found an AUC of 0.94 for deep learning-based detection of pulmonary nodules on CT (Aggarwal et al., 2021). However, very few of these studies used prospectively collected data or validated the algorithms on an independent external dataset (Aggarwal et al., 2021). A deep learning algorithm trained on more than 10,000 low-dose chest CT scans achieved an AUC of 0.86 to 0.94 for 1-year lung cancer prediction, with histopathology as the reference standard, when tested on three external validation datasets (Mikhael et al., 2023).

A study of 346 participants in a lung cancer screening program found that a deep learning algorithm was more sensitive for pulmonary nodules than double reading by two radiologists highly specialized in thoracic imaging (86% versus 79%) but had a much higher false detection rate (1.53 versus 0.13 per scan) (L. Li et al., 2019). A similar study of 360 people found that a deep learning algorithm detected pulmonary nodules on low-dose CT with a sensitivity of 90% and a false detection rate of 1 per scan, compared with a sensitivity of 76% and a false detection rate of 0.04 per scan when the scans were double read by pairs made up of a junior and a senior radiologist (Cui et al., 2022). The difference in sensitivity between the algorithm and the radiologists was particularly large for nodules 4 to 6 mm in diameter (86% versus 59%) (Cui et al., 2022).
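The two figures compared in these reader studies, sensitivity and false detections per scan, can be derived from simple counts. The sketch below is a minimal illustration; the function name and the counts in the usage example are hypothetical and are not data from the cited studies.

```python
def detection_metrics(true_positives: int, false_negatives: int,
                      false_positives: int, n_scans: int) -> tuple[float, float]:
    """Return (sensitivity, false positives per scan) for a nodule detector.

    sensitivity         = detected nodules / all reference-standard nodules
    false positives/scan = spurious detections / number of scans read
    """
    n_nodules = true_positives + false_negatives
    if n_nodules == 0 or n_scans <= 0:
        raise ValueError("need at least one reference nodule and one scan")
    sensitivity = true_positives / n_nodules
    fp_per_scan = false_positives / n_scans
    return sensitivity, fp_per_scan


# Hypothetical counts chosen for illustration only.
sens, fp_rate = detection_metrics(true_positives=86, false_negatives=14,
                                  false_positives=153, n_scans=100)
print(f"sensitivity = {sens:.0%}, false positives per scan = {fp_rate:.2f}")
```

Reporting both numbers together matters because a detector can raise its sensitivity simply by flagging more candidates, at the cost of more false detections for the radiologist to dismiss.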

Pulmonary nodule segmentation

Accurate measurement of pulmonary nodules is important for monitoring nodule growth over time and for guiding the management of these lesions (Bankier et al., 2017). However, the estimated error in manual measurement of pulmonary nodule diameter is about 1.5 mm, which is a substantial error when estimating the size of small nodules (Bankier et al., 2017; Revel et al., 2004). In addition, relying on nodule diameter may not accurately reflect nodule growth, because it assumes that all nodules are perfect spheres (Devaraj et al., 2017). In this respect, the latest version, Lung-RADS 2022, includes the option of volumetric assessment (mm³).
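A short worked example shows why a 1.5 mm diameter error weighs so heavily on small nodules. It is a sketch under the idealized assumption of a perfectly spherical nodule (the very assumption criticized above), with volume V = (π/6)·d³; the function names are illustrative.

```python
import math


def sphere_volume_mm3(diameter_mm: float) -> float:
    """Volume of a sphere of the given diameter, in cubic millimeters."""
    return math.pi / 6.0 * diameter_mm ** 3


def relative_volume_error(true_diameter_mm: float, diameter_error_mm: float) -> float:
    """Relative volume overestimate when the diameter is over-measured by the given error."""
    actual = sphere_volume_mm3(true_diameter_mm)
    measured = sphere_volume_mm3(true_diameter_mm + diameter_error_mm)
    return (measured - actual) / actual


# Same 1.5 mm diameter error applied to nodules of increasing size.
for diameter in (6.0, 10.0, 20.0):
    error = relative_volume_error(diameter, 1.5)
    print(f"{diameter:.0f} mm nodule: {error:+.0%} volume error")
# 6 mm nodule: +95% volume error
# 10 mm nodule: +52% volume error
# 20 mm nodule: +24% volume error
```

Because volume scales with the cube of the diameter, the same absolute measurement error nearly doubles the apparent volume of a 6 mm nodule, which could be mistaken for growth.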

Segmentation of pulmonary nodules allows their volume to be estimated accurately. It is a multi-step process that comprises nodule detection, a "region growing" step in which the nodule boundaries are identified by exploiting differences in tissue attenuation between the nodule and the surrounding lung parenchyma, and removal of surrounding structures with similar attenuation, such as blood vessels (Devaraj et al., 2017).

Simple segmentation approaches that rely heavily on attenuation differences between nodules and the surrounding lung parenchyma perform poorly on juxtavascular and sub-solid nodules (Devaraj et al., 2017). In contrast, CNNs, in particular encoder-decoder architectures, achieve much better segmentation performance, with Dice similarity coefficients (a measure of spatial overlap) of 0.79 to 0.93 relative to ground-truth segmentations produced by radiologists (Dong et al., 2020; Gu et al., 2021). Several AI-based pulmonary nodule segmentation algorithms that perform automated nodule volumetry and longitudinal volume tracking are commercially available (Hwang et al., 2021; Jacobs et al., 2021; Murchison et al., 2022; Park et al., 2019; Röhrich et al., 2023; Singh et al., 2021).
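For readers unfamiliar with the Dice similarity coefficient, it is defined as twice the size of the overlap between two segmentations divided by the sum of their sizes, so it ranges from 0 (no overlap) to 1 (identical masks). The sketch below computes it for two binary masks flattened to lists of 0 and 1; the function name and the toy masks are illustrative, and returning 1.0 for two empty masks is an assumed convention.

```python
def dice_coefficient(mask_a: list[int], mask_b: list[int]) -> float:
    """Dice = 2 * |A intersect B| / (|A| + |B|) for two flattened binary masks."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    total = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / total


# Toy one-dimensional "masks": 1 marks a voxel labeled as nodule.
radiologist = [0, 1, 1, 1, 1, 0, 0, 0]
algorithm = [0, 0, 1, 1, 1, 1, 0, 0]
print(dice_coefficient(radiologist, algorithm))  # 0.75
```

In this toy case three of the four voxels in each mask overlap, giving 2 × 3 / (4 + 4) = 0.75, slightly below the 0.79 to 0.93 range reported above.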

Pulmonary nodule classification

The factors considered when determining the probability that a pulmonary nodule is cancerous include its size, shape, composition, location, and whether and how it changes over time (Callister et al., 2015; Lung Rads, n.d.). Studies have shown only moderate inter- and intra-observer agreement among radiologists on the features used to estimate the probability that a pulmonary nodule is malignant, with discordant classification in more than one third of nodules (van Riel et al., 2015). Several AI-based solutions for pulmonary nodule classification, many of which include a malignancy risk assessment, are now commercially available (Adams et al., 2023; Hwang et al., 2021; Murchison et al., 2022; Park et al., 2019; Röhrich et al., 2023).

A deep learning algorithm trained on data from 943 patients and validated on an independent dataset of 468 patients showed an overall accuracy of 78 to 80% for classifying nodules into one of six categories (solid, calcified, part-solid, non-solid, perifissural, or spiculated) (Ciompi et al., 2017). Accuracy was lowest for part-solid, spiculated, and perifissural nodules (Ciompi et al., 2017).

A deep learning algorithm tested on 6,716 low-dose CT scans and validated on an independent dataset of 1,139 low-dose CT scans showed an AUC of 0.94 for lung cancer risk prediction, with histopathology as the reference standard (Ardila et al., 2019). The algorithm incorporates information from prior CT scans of the same patient when these are available, and in those cases its performance was similar to that of six radiologists (Ardila et al., 2019). When prior imaging was not available, the algorithm had a false-positive rate 11% lower and a false-negative rate 5% lower than the radiologists (Ardila et al., 2019).

Another study used a multi-task three-dimensional CNN to extract nodule features such as calcification, lobulation, sphericity, spiculation, margins, and texture, with an "off-by-one" accuracy of 91.3% (Hussein et al., 2017).

The study used transfer learning from an algorithm trained on one million videos and tested it on a dataset of more than 1,000 chest CT scans. The reference standard consisted of malignancy scores and nodule feature scores assessed by at least three radiologists (Hussein et al., 2017). Using an interpretable multi-task 3D CNN tested on the same dataset without transfer learning, another study simultaneously segmented pulmonary nodules, predicted the probability of malignancy, and generated descriptive nodule attributes, achieving a Dice similarity coefficient of 0.74 and an "off-by-one" accuracy of 97.6% (Wu et al., 2018).

Challenges and future directions

On the basis of the available evidence, some countries have begun to integrate AI into their national screening programs. One example is the federal law recently published by the German Federal Ministry of Justice, which includes computer-aided detection software for pulmonary nodules in the new lung cancer screening program, to be used for nodule detection and volumetry, determination of the volume doubling time, and storage of the assessment for structured reporting (https://www.recht.bund.de/bgbl/1/2024/162/VO.html).
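The volume doubling time mentioned above is conventionally estimated from two volumetric measurements by assuming exponential growth: VDT = Δt · ln 2 / ln(V₂ / V₁). The sketch below implements that standard formula; the function name and example volumes are illustrative assumptions, and it is not a description of any particular vendor's software.

```python
import math


def volume_doubling_time_days(volume_first_mm3: float,
                              volume_second_mm3: float,
                              interval_days: float) -> float:
    """Volume doubling time under an exponential growth assumption.

    VDT = interval * ln(2) / ln(V2 / V1).
    Returns math.inf when the volume is unchanged; a negative value
    indicates that the nodule has shrunk between the two scans.
    """
    if volume_first_mm3 <= 0 or volume_second_mm3 <= 0:
        raise ValueError("volumes must be positive")
    if interval_days <= 0:
        raise ValueError("interval must be positive")
    if volume_second_mm3 == volume_first_mm3:
        return math.inf
    growth = math.log(volume_second_mm3 / volume_first_mm3)
    return interval_days * math.log(2.0) / growth


# A nodule growing from 100 to 200 mm^3 in 90 days has doubled exactly once,
# so its doubling time is about 90 days; slower growth gives a longer time.
print(round(volume_doubling_time_days(100.0, 200.0, 90.0)))
print(round(volume_doubling_time_days(100.0, 150.0, 90.0)))
```

Because the estimate depends on the ratio of two volumes, it inherits the measurement errors of both scans, which is one reason automated volumetry is specified alongside it.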

Despite the very encouraging results obtained in recent years in improving lung cancer screening with artificial intelligence, several important methodological challenges remain.

The sheer heterogeneity of the studies conducted so far makes it difficult to synthesize the evidence through meta-analysis (Aggarwal et al., 2021). Moreover, it is unclear how generalizable these algorithms are, because most studies lack robust external validation (Aggarwal et al., 2021). The added value of some of these algorithms beyond improvements in diagnostic performance, for example in terms of greater efficiency or lower costs, also needs to be studied (National Institute for Health and Care Excellence [NICE], n.d.).

Sub-solid nodules, including pure ground-glass nodules, are more likely to be malignant than solid nodules (Henschke et al., 2002). However, because the attenuation differences between these nodules and the surrounding lung parenchyma are very subtle, they are particularly difficult to detect (de Margerie-Mellon & Chassagnon, 2023; L. Li et al., 2019; Setio et al., 2017). Some automated algorithms have shown promise for detecting sub-solid nodules but still need to be extensively validated (Qi et al., 2020, 2021).

Conclusion

Artificial intelligence could help identify more people at high risk of lung cancer and improve the quality of screening examinations. AI has proved particularly useful for identifying and segmenting potentially malignant pulmonary nodules, often exceeding the sensitivity of radiologists (Cui et al., 2022; L. Li et al., 2019). It has also shown promise in estimating the probability that a pulmonary nodule is malignant, particularly when prior images are available.

Future research should aim to address the shortcomings of earlier studies, notably the lack of robust external validation and the neglect of efficiency and cost outcomes, paving the way for earlier diagnosis and better treatment of lung cancer.

References

AAMC Report Reinforces Mounting Physician Shortage. (2021). AAMC. https://www.aamc.org/news-insights/press-releases/aamcreport-reinforces-mounting-physician-shortage, accessed on 26.09.2024

Adams, S. J., Babyn, P. S., & Danilkewich, A. (2016). Toward a comprehensive management strategy for incidental findings in imaging. Canadian Family Physician Medecin de Famille Canadien, 62(7), 541–543.

Adams, S. J., Madtes, D. K., Burbridge, B., Johnston, J., Goldberg, I. G., Siegel, E. L., Babyn, P., Nair, V. S., & Calhoun, M. E. (2023). Clinical Impact and Generalizability of a Computer-Assisted Diagnostic Tool to Risk-Stratify Lung Nodules With CT. Journal of the American College of Radiology: JACR, 20(2), 232–242. https://doi.org/10.1016/j.jacr.2022.08.006

Aggarwal, R., Farag, S., Martin, G., Ashrafian, H., & Darzi, A. (2021). Patient Perceptions on Data Sharing and Applying Artificial Intelligence to Health Care Data: Cross-sectional Survey. Journal of Medical Internet Research, 23(8), e26162. https://doi.org/10.2196/26162

Aggarwal, R., Sounderajah, V., Martin, G., Ting, D. S. W., Karthikesalingam, A., King, D., Ashrafian, H., & Darzi, A. (2021). Diagnostic accuracy of deep learning in medical imaging: a systematic review and meta-analysis. NPJ Digital Medicine, 4(1), 65. https://doi.org/10.1038/s41746-021-00438-z

Ali, N., Lifford, K. J., Carter, B., McRonald, F., Yadegarfar, G., Baldwin, D. R., Weller, D., Hansell, D. M., Duffy, S. W., Field, J. K., & Brain, K. (2015). Barriers to uptake among high-risk individuals declining participation in lung cancer screening: a mixed methods analysis of the UK Lung Cancer Screening (UKLS) trial. BMJ Open, 5(7), e008254. https://doi.org/10.1136/bmjopen-2015-008254

Al Mohammad, B., Hillis, S. L., Reed, W., Alakhras, M., & Brennan, P. C. (2019). Radiologist performance in the detection of lung cancer using CT. Clinical Radiology, 74(1), 67–75. https://doi.org/10.1016/j.crad.2018.10.008

Ardila, D., Kiraly, A. P., Bharadwaj, S., Choi, B., Reicher, J. J., Peng, L., Tse, D., Etemadi, M., Ye, W., Corrado, G., Naidich, D. P., & Shetty, S. (2019). End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nature Medicine, 25(6), 954–961. https://doi.org/10.1038/s41591-019-0447-x

Armato, S. G., 3rd, Roberts, R. Y., Kocherginsky, M., Aberle, D. R., Kazerooni, E. A., Macmahon, H., van Beek, E. J. R., Yankelevitz, D., McLennan, G., McNitt-Gray, M. F., Meyer, C. R., Reeves, A. P., Caligiuri, P., Quint, L. E., Sundaram, B., Croft, B. Y., & Clarke, L. P. (2009). Assessment of radiologist performance in the detection of lung nodules: dependence on the definition of “truth.” Academic Radiology, 16(1), 28–38. https://doi.org/10.1016/j.acra.2008.05.022

Austin, J. H., Romney, B. M., & Goldsmith, L. S. (1992). Missed bronchogenic carcinoma: radiographic findings in 27 patients with a potentially resectable lesion evident in retrospect. Radiology, 182(1), 115–122. https://doi.org/10.1148/radiology.182.1.1727272

Bankier, A. A., MacMahon, H., Goo, J. M., Rubin, G. D., Schaefer-Prokop, C. M., & Naidich, D. P. (2017). Recommendations for Measuring Pulmonary Nodules at CT: A Statement from the Fleischner Society. Radiology, 285(2), 584–600. https://doi.org/10.1148/radiol.2017162894

Black, W. C., Gareen, I. F., Soneji, S. S., Sicks, J. D., Keeler, E. B., Aberle, D. R., Naeim, A., Church, T. R., Silvestri, G. A., Gorelick, J., Gatsonis, C., & National Lung Screening Trial Research Team. (2014). Cost-effectiveness of CT screening in the National Lung Screening Trial. The New England Journal of Medicine, 371(19), 1793–1802. https://doi.org/10.1056/NEJMoa1312547

Burzic, A., O’Dowd, E. L., & Baldwin, D. R. (2022). The Future of Lung Cancer Screening: Current Challenges and Research Priorities. Cancer Management and Research, 14, 637–645. https://doi.org/10.2147/CMAR.S293877

Callister, M. E. J., Baldwin, D. R., Akram, A. R., Barnard, S., Cane, P., Draffan, J., Franks, K., Gleeson, F., Graham, R., Malhotra, P., Prokop, M., Rodger, K., Subesinghe, M., Waller, D., Woolhouse, I., British Thoracic Society Pulmonary Nodule Guideline Development Group, & British Thoracic Society Standards of Care Committee. (2015). British Thoracic Society guidelines for the investigation and management of pulmonary nodules. Thorax, 70 Suppl 2, ii1–ii54. https://doi.org/10.1136/thoraxjnl-2015-207168

Cassidy, A., Myles, J. P., van Tongeren, M., Page, R. D., Liloglou, T., Duffy, S. W., & Field, J. K. (2008). The LLP risk model: an individual risk prediction model for lung cancer. British Journal of Cancer, 98(2), 270–276. https://doi.org/10.1038/sj.bjc.6604158

Cha, M. J., Chung, M. J., Lee, J. H., & Lee, K. S. (2019). Performance of Deep Learning Model in Detecting Operable Lung Cancer With Chest Radiographs. Journal of Thoracic Imaging, 34(2), 86–91. https://doi.org/10.1097/RTI.0000000000000388

Ciompi, F., Chung, K., van Riel, S. J., Setio, A. A. A., Gerke, P. K., Jacobs, C., Scholten, E. T., Schaefer-Prokop, C., Wille, M. M. W., Marchianò, A., Pastorino, U., Prokop, M., & van Ginneken, B. (2017). Towards automatic pulmonary nodule management in lung cancer screening with deep learning. Scientific Reports, 7, 46479. https://doi.org/10.1038/srep46479

Cui, X., Zheng, S., Heuvelmans, M. A., Du, Y., Sidorenkov, G., Fan, S., Li, Y., Xie, Y., Zhu, Z., Dorrius, M. D., Zhao, Y., Veldhuis, R. N. J., de Bock, G. H., Oudkerk, M., van Ooijen, P. M. A., Vliegenthart, R., & Ye, Z. (2022). Performance of a deep learning-based lung nodule detection system as an alternative reader in a Chinese lung cancer screening program. European Journal of Radiology, 146, 110068. https://doi.org/10.1016/j.ejrad.2021.110068

de Koning, H. J., van der Aalst, C. M., de Jong, P. A., Scholten, E. T., Nackaerts, K., Heuvelmans, M. A., Lammers, J.-W. J., Weenink, C., Yousaf-Khan, U., Horeweg, N., van ’t Westeinde, S., Prokop, M., Mali, W. P., Mohamed Hoesein, F. A. A., van Ooijen, P. M. A., Aerts, J. G. J. V., den Bakker, M. A., Thunnissen, E., Verschakelen, J., … Oudkerk, M. (2020). Reduced Lung-Cancer Mortality with Volume CT Screening in a Randomized Trial. The New England Journal of Medicine, 382(6), 503–513. https://doi.org/10.1056/NEJMoa1911793

de Margerie-Mellon, C., & Chassagnon, G. (2023). Artificial intelligence: A critical review of applications for lung nodule and lung cancer. Diagnostic and Interventional Imaging, 104(1), 11–17. https://doi.org/10.1016/j.diii.2022.11.007

Detterbeck, F. C., Lewis, S. Z., Diekemper, R., Addrizzo-Harris, D., & Alberts, W. M. (2013). Executive Summary: Diagnosis and management of lung cancer, 3rd ed: American College of Chest Physicians evidence-based clinical practice guidelines. Chest, 143(5 Suppl), 7S–37S. https://doi.org/10.1378/chest.12-2377

Devaraj, A., van Ginneken, B., Nair, A., & Baldwin, D. (2017). Use of Volumetry for Lung Nodule Management: Theory and Practice. Radiology, 284(3), 630–644. https://doi.org/10.1148/radiol.2017151022

Dong, X., Xu, S., Liu, Y., Wang, A., Saripan, M. I., Li, L., Zhang, X., & Lu, L. (2020). Multi-view secondary input collaborative deep learning for lung nodule 3D segmentation. Cancer Imaging: The Official Publication of the International Cancer Imaging Society, 20(1), 53. https://doi.org/10.1186/s40644-020-00331-0

Field, J. K., Duffy, S. W., Baldwin, D. R., Whynes, D. K., Devaraj, A., Brain, K. E., Eisen, T., Gosney, J., Green, B. A., Holemans, J. A., Kavanagh, T., Kerr, K. M., Ledson, M., Lifford, K. J., McRonald, F. E., Nair, A., Page, R. D., Parmar, M. K. B., Rassl, D. M., … Hansell, D. M. (2016). UK Lung Cancer RCT Pilot Screening Trial: baseline findings from the screening arm provide evidence for the potential implementation of lung cancer screening. Thorax, 71(2), 161–170. https://doi.org/10.1136/thoraxjnl-2015-207140

Gierada, D. S., Pinsky, P. F., Duan, F., Garg, K., Hart, E. M., Kazerooni, E. A., Nath, H., Watts, J. R., Jr, & Aberle, D. R. (2017). Interval lung cancer after a negative CT screening examination: CT findings and outcomes in National Lung Screening Trial participants. European Radiology, 27(8), 3249–3256. https://doi.org/10.1007/s00330-016-4705-8

Gu, D., Liu, G., & Xue, Z. (2021). On the performance of lung nodule detection, segmentation and classification. Computerized Medical Imaging and Graphics: The Official Journal of the Computerized Medical Imaging Society, 89, 101886. https://doi.org/10.1016/j.compmedimag.2021.101886

Hamilton, W., Peters, T. J., Round, A., & Sharp, D. (2005). What are the clinical features of lung cancer before the diagnosis is made? A population based case-control study. Thorax, 60(12), 1059–1065. https://doi.org/10.1136/thx.2005.045880

Hanna, T. N., Lamoureux, C., Krupinski, E. A., Weber, S., & Johnson, J.-O. (2018). Effect of Shift, Schedule, and Volume on Interpretive Accuracy: A Retrospective Analysis of 2.9 Million Radiologic Examinations. Radiology, 287(1), 205–212. https://doi.org/10.1148/radiol.2017170555

Hata, A., Yanagawa, M., Yoshida, Y., Miyata, T., Tsubamoto, M., Honda, O., & Tomiyama, N. (2020). Combination of Deep Learning-Based Denoising and Iterative Reconstruction for Ultra-Low-Dose CT of the Chest: Image Quality and Lung-RADS Evaluation. AJR. American Journal of Roentgenology, 215(6), 1321–1328. https://doi.org/10.2214/AJR.19.22680

Henschke, C. I., McCauley, D. I., Yankelevitz, D. F., Naidich, D. P., McGuinness, G., Miettinen, O. S., Libby, D. M., Pasmantier, M. W., Koizumi, J., Altorki, N. K., & Smith, J. P. (1999). Early Lung Cancer Action Project: overall design and findings from baseline screening. The Lancet, 354(9173), 99–105. https://doi.org/10.1016/S0140-6736(99)06093-6

Henschke, C. I., Yankelevitz, D. F., Mirtcheva, R., McGuinness, G., McCauley, D., & Miettinen, O. S. (2002). CT Screening for Lung Cancer. American Journal of Roentgenology, 178(5), 1053–1057. https://doi.org/10.2214/ajr.178.5.1781053

Homayounieh, F., Digumarthy, S., Ebrahimian, S., Rueckel, J., Hoppe, B. F., Sabel, B. O., Conjeti, S., Ridder, K., Sistermanns, M., Wang, L., Preuhs, A., Ghesu, F., Mansoor, A., Moghbel, M., Botwin, A., Singh, R., Cartmell, S., Patti, J., Huemmer, C., … Kalra, M. (2021). An Artificial Intelligence-Based Chest X-ray Model on Human Nodule Detection Accuracy From a Multicenter Study. JAMA Network Open, 4(12), e2141096. https://doi.org/10.1001/jamanetworkopen.2021.41096

Hussein, S., Cao, K., Song, Q., & Bagci, U. (2017). Risk Stratification of Lung Nodules Using 3D CNN-Based Multi-task Learning. Information Processing in Medical Imaging, 249–260. https://doi.org/10.1007/978-3-319-59050-9_20

Hwang, E. J., Goo, J. M., Kim, H. Y., Yi, J., Yoon, S. H., & Kim, Y. (2021). Implementation of the cloud-based computerized interpretation system in a nationwide lung cancer screening with low-dose CT: comparison with the conventional reading system. European Radiology, 31(1), 475–485. https://doi.org/10.1007/s00330-020-07151-7

Jacobs, C., Schreuder, A., van Riel, S. J., Scholten, E. T., Wittenberg, R., Wille, M. M. W., de Hoop, B., Sprengers, R., Mets, O. M., Geurts, B., Prokop, M., Schaefer-Prokop, C., & van Ginneken, B. (2021). Assisted versus Manual Interpretation of Low-Dose CT Scans for Lung Cancer Screening: Impact on Lung-RADS Agreement. Radiology. Imaging Cancer, 3(5), e200160. https://doi.org/10.1148/rycan.2021200160

Jiang, B., Li, N., Shi, X., Zhang, S., Li, J., de Bock, G. H., Vliegenthart, R., & Xie, X. (2022). Deep Learning Reconstruction Shows Better Lung Nodule Detection for Ultra-Low-Dose Chest CT. Radiology, 303(1), 202–212. https://doi.org/10.1148/radiol.210551

Jones, C. M., Buchlak, Q. D., Oakden-Rayner, L., Milne, M., Seah, J., Esmaili, N., & Hachey, B. (2021). Chest radiographs and machine learning - Past, present and future. Journal of Medical Imaging and Radiation Oncology, 65(5), 538–544. https://doi.org/10.1111/1754-9485.13274

Kinsinger, L. S., Anderson, C., Kim, J., Larson, M., Chan, S. H., King, H. A., Rice, K. L., Slatore, C. G., Tanner, N. T., Pittman, K., Monte, R. J., McNeil, R. B., Grubber, J. M., Kelley, M. J., Provenzale, D., Datta, S. K., Sperber, N. S., Barnes, L. K., Abbott, D. H., … Jackson, G. L. (2017). Implementation of Lung Cancer Screening in the Veterans Health Administration. JAMA Internal Medicine, 177(3), 399–406. https://doi.org/10.1001/jamainternmed.2016.9022

LCS Project. (n.d.). https://www.myesti.org/lungcancerscreeningcertificationproject/, accessed on 26.09.2024

Leader, J. K., Warfel, T. E., Fuhrman, C. R., Golla, S. K., Weissfeld, J. L., Avila, R. S., Turner, W. D., & Zheng, B. (2005). Pulmonary nodule detection with low-dose CT of the lung: agreement among radiologists. AJR. American Journal of Roentgenology, 185(4), 973–978. https://doi.org/10.2214/AJR.04.1225

Li, J., Chung, S., Wei, E. K., & Luft, H. S. (2018). New recommendation and coverage of low-dose computed tomography for lung cancer screening: uptake has increased but is still low. BMC Health Services Research, 18(1), 525. https://doi.org/10.1186/s12913-018-3338-9

Li, L., Liu, Z., Huang, H., Lin, M., & Luo, D. (2019). Evaluating the performance of a deep learning-based computer-aided diagnosis (DL-CAD) system for detecting and characterizing lung nodules: Comparison with the performance of double reading by radiologists. Thoracic Cancer, 10(2), 183–192. https://doi.org/10.1111/1759-7714.12931

Li, X., Shen, L., Xie, X., Huang, S., Xie, Z., Hong, X., & Yu, J. (2020). Multi-resolution convolutional networks for chest X-ray radiograph based lung nodule detection. Artificial Intelligence in Medicine, 103, 101744. https://doi.org/10.1016/j.artmed.2019.101744

Lu, M. T., Raghu, V. K., Mayrhofer, T., Aerts, H. J. W. L., & Hoffmann, U. (2020). Deep Learning Using Chest Radiographs to Identify High-Risk Smokers for Lung Cancer Screening Computed Tomography: Development and Validation of a Prediction Model. Annals of Internal Medicine, 173(9), 704–713. https://doi.org/10.7326/M20-1868

Lung Rads. (n.d.). https://www.acr.org/Clinical-Resources/Reporting-and-Data-Systems/Lung-Rads, accessed on 26.09.2024

Malhotra, J., Malvezzi, M., Negri, E., La Vecchia, C., & Boffetta, P. (2016). Risk factors for lung cancer worldwide. The European Respiratory Journal: Official Journal of the European Society for Clinical Respiratory Physiology, 48(3), 889–902. https://doi.org/10.1183/13993003.00359-2016

Marcus, P. M., Bergstralh, E. J., Fagerstrom, R. M., Williams, D. E., Fontana, R., Taylor, W. F., & Prorok, P. C. (2000). Lung cancer mortality in the Mayo Lung Project: impact of extended follow-up. Journal of the National Cancer Institute, 92(16), 1308–1316. https://doi.org/10.1093/jnci/92.16.1308

Mendoza, J., & Pedrini, H. (2020). Detection and classification of lung nodules in chest X‐ray images using deep convolutional neural networks. Computational Intelligence. An International Journal, 36(2), 370–401. https://doi.org/10.1111/coin.12241

Mikhael, P. G., Wohlwend, J., Yala, A., Karstens, L., Xiang, J., Takigami, A. K., Bourgouin, P. P., Chan, P., Mrah, S., Amayri, W., Juan, Y.-H., Yang, C.-T., Wan, Y.-L., Lin, G., Sequist, L. V., Fintelmann, F. J., & Barzilay, R. (2023). Sybil: A Validated Deep Learning Model to Predict Future Lung Cancer Risk From a Single Low-Dose Chest Computed Tomography. Journal of Clinical Oncology: Official Journal of the American Society of Clinical Oncology, 41(12), 2191–2200. https://doi.org/10.1200/JCO.22.01345

Morgan, L., Choi, H., Reid, M., Khawaja, A., & Mazzone, P. J. (2017). Frequency of Incidental Findings and Subsequent Evaluation in Low-Dose Computed Tomographic Scans for Lung Cancer Screening. Annals of the American Thoracic Society, 14(9), 1450–1456. https://doi.org/10.1513/AnnalsATS.201612-1023OC

Murchison, J. T., Ritchie, G., Senyszak, D., Nijwening, J. H., van Veenendaal, G., Wakkie, J., & van Beek, E. J. R. (2022). Validation of a deep learning computer aided system for CT based lung nodule detection, classification, and growth rate estimation in a routine clinical population. PloS One, 17(5), e0266799. https://doi.org/10.1371/journal.pone.0266799

Nam, J. G., Ahn, C., Choi, H., Hong, W., Park, J., Kim, J. H., & Goo, J. M. (2021). Image quality of ultralow-dose chest CT using deep learning techniques: potential superiority of vendor-agnostic postprocessing over vendor-specific techniques. European Radiology, 31(7), 5139–5147. https://doi.org/10.1007/s00330-020-07537-7

Nam, J. G., Park, S., Hwang, E. J., Lee, J. H., Jin, K.-N., Lim, K. Y., Vu, T. H., Sohn, J. H., Hwang, S., Goo, J. M., & Park, C. M. (2019). Development and Validation of Deep Learning-based Automatic Detection Algorithm for Malignant Pulmonary Nodules on Chest Radiographs. Radiology, 290(1), 218–228. https://doi.org/10.1148/radiol.2018180237

National Institute for Health and Care Excellence (NICE). (n.d.). Evidence standards framework for digital health technologies. https://www.nice.org.uk/corporate/ecd7, accessed on 26.09.2024

National Lung Screening Trial Research Team, Aberle, D. R., Adams, A. M., Berg, C. D., Black, W. C., Clapp, J. D., Fagerstrom, R. M., Gareen, I. F., Gatsonis, C., Marcus, P. M., & Sicks, J. D. (2011). Reduced lung-cancer mortality with low-dose computed tomographic screening. The New England Journal of Medicine, 365(5), 395–409. https://doi.org/10.1056/NEJMoa1102873

Park, H., Ham, S.-Y., Kim, H.-Y., Kwag, H. J., Lee, S., Park, G., Kim, S., Park, M., Sung, J.-K., & Jung, K.-H. (2019). A deep learning-based CAD that can reduce false negative reports: A preliminary study in health screening center. RSNA 2019. https://archive.rsna.org/2019/19017034.html

Pehrson, L. M., Nielsen, M. B., & Ammitzbøl Lauridsen, C. (2019). Automatic Pulmonary Nodule Detection Applying Deep Learning or Machine Learning Algorithms to the LIDC-IDRI Database: A Systematic Review. Diagnostics (Basel, Switzerland), 9(1). https://doi.org/10.3390/diagnostics9010029

Qi, L.-L., Wang, J.-W., Yang, L., Huang, Y., Zhao, S.-J., Tang, W., Jin, Y.-J., Zhang, Z.-W., Zhou, Z., Yu, Y.-Z., Wang, Y.-Z., & Wu, N. (2021). Natural history of pathologically confirmed pulmonary subsolid nodules with deep learning-assisted nodule segmentation. European Radiology, 31(6), 3884–3897. https://doi.org/10.1007/s00330-020-07450-z

Qi, L.-L., Wu, B.-T., Tang, W., Zhou, L.-N., Huang, Y., Zhao, S.-J., Liu, L., Li, M., Zhang, L., Feng, S.-C., Hou, D.-H., Zhou, Z., Li, X.-L., Wang, Y.-Z., Wu, N., & Wang, J.-W. (2020). Long-term follow-up of persistent pulmonary pure ground-glass nodules with deep learning-assisted nodule segmentation. European Radiology, 30(2), 744–755. https://doi.org/10.1007/s00330-019-06344-z

Raghu, V. K., Walia, A. S., Zinzuwadia, A. N., Goiffon, R. J., Shepard, J.-A. O., Aerts, H. J. W. L., Lennes, I. T., & Lu, M. T. (2022). Validation of a Deep Learning-Based Model to Predict Lung Cancer Risk Using Chest Radiographs and Electronic Medical Record Data. JAMA Network Open, 5(12), e2248793. https://doi.org/10.1001/jamanetworkopen.2022.48793

Ravin, C. E., & Chotas, H. G. (1997). Chest radiography. Radiology, 204(3), 593–600. https://doi.org/10.1148/radiology.204.3.9280231

Revel, M.-P., Bissery, A., Bienvenu, M., Aycard, L., Lefort, C., & Frija, G. (2004). Are two-dimensional CT measurements of small noncalcified pulmonary nodules reliable? Radiology, 231(2), 453–458. https://doi.org/10.1148/radiol.2312030167

Röhrich, S., Heidinger, B. H., Prayer, F., Weber, M., Krenn, M., Zhang, R., Sufana, J., Scheithe, J., Kanbur, I., Korajac, A., Pötsch, N., Raudner, M., Al-Mukhtar, A., Fueger, B. J., Milos, R.-I., Scharitzer, M., Langs, G., & Prosch, H. (2023). Impact of a content-based image retrieval system on the interpretation of chest CTs of patients with diffuse parenchymal lung disease. European Radiology, 33(1), 360–367. https://doi.org/10.1007/s00330-022-08973-3

Sands, J., Tammemägi, M. C., Couraud, S., Baldwin, D. R., Borondy-Kitts, A., Yankelevitz, D., Lewis, J., Grannis, F., Kauczor, H.-U., von Stackelberg, O., Sequist, L., Pastorino, U., & McKee, B. (2021). Lung Screening Benefits and Challenges: A Review of The Data and Outline for Implementation. Journal of Thoracic Oncology: Official Publication of the International Association for the Study of Lung Cancer, 16(1), 37–53. https://doi.org/10.1016/j.jtho.2020.10.127

Setio, A. A. A., Traverso, A., de Bel, T., Berens, M. S. N., van den Bogaard, C., Cerello, P., Chen, H., Dou, Q., Fantacci, M. E., Geurts, B., Gugten, R. van der, Heng, P. A., Jansen, B., de Kaste, M. M. J., Kotov, V., Lin, J. Y.-H., Manders, J. T. M. C., Sóñora-Mengana, A., García-Naranjo, J. C., … Jacobs, C. (2017). Validation, comparison, and combination of algorithms for automatic detection of pulmonary nodules in computed tomography images: The LUNA16 challenge. Medical Image Analysis, 42, 1–13. https://doi.org/10.1016/j.media.2017.06.015

Siegel, D. A., Fedewa, S. A., Henley, S. J., Pollack, L. A., & Jemal, A. (2021). Proportion of Never Smokers Among Men and Women With Lung Cancer in 7 US States. JAMA Oncology, 7(2), 302–304. https://doi.org/10.1001/jamaoncol.2020.6362

Singh, R., Kalra, M. K., Homayounieh, F., Nitiwarangkul, C., McDermott, S., Little, B. P., Lennes, I. T., Shepard, J.-A. O., & Digumarthy, S. R. (2021). Artificial intelligence-based vessel suppression for detection of sub-solid nodules in lung cancer screening computed tomography. Quantitative Imaging in Medicine and Surgery, 11(4), 1134–1143. https://doi.org/10.21037/qims-20-630

Smieliauskas, F., MacMahon, H., Salgia, R., & Shih, Y.-C. T. (2014). Geographic variation in radiologist capacity and widespread implementation of lung cancer CT screening. Journal of Medical Screening, 21(4), 207–215. https://doi.org/10.1177/0969141314548055

Sung, H., Ferlay, J., Siegel, R. L., Laversanne, M., Soerjomataram, I., Jemal, A., & Bray, F. (2021). Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA: A Cancer Journal for Clinicians, 71(3), 209–249. https://doi.org/10.3322/caac.21660

Sun, S., Schiller, J. H., & Gazdar, A. F. (2007). Lung cancer in never smokers--a different disease. Nature Reviews. Cancer, 7(10), 778–790. https://doi.org/10.1038/nrc2190

Tammemägi, M. C., Katki, H. A., Hocking, W. G., Church, T. R., Caporaso, N., Kvale, P. A., Chaturvedi, A. K., Silvestri, G. A., Riley, T. L., Commins, J., & Berg, C. D. (2013). Selection criteria for lung-cancer screening. The New England Journal of Medicine, 368(8), 728–736. https://doi.org/10.1056/NEJMoa1211776

Thai, A. A., Solomon, B. J., Sequist, L. V., Gainor, J. F., & Heist, R. S. (2021). Lung cancer. The Lancet, 398(10299), 535–554. https://doi.org/10.1016/S0140-6736(21)00312-3

The Royal College of Radiologists. (2022). Clinical Radiology Workforce Census.

Toumazis, I., de Nijs, K., Cao, P., Bastani, M., Munshi, V., Ten Haaf, K., Jeon, J., Gazelle, G. S., Feuer, E. J., de Koning, H. J., Meza, R., Kong, C. Y., Han, S. S., & Plevritis, S. K. (2021). Cost-effectiveness Evaluation of the 2021 US Preventive Services Task Force Recommendation for Lung Cancer Screening. JAMA Oncology, 7(12), 1833–1842. https://doi.org/10.1001/jamaoncol.2021.4942

van Riel, S. J., Sánchez, C. I., Bankier, A. A., Naidich, D. P., Verschakelen, J., Scholten, E. T., de Jong, P. A., Jacobs, C., van Rikxoort, E., Peters-Bax, L., Snoeren, M., Prokop, M., van Ginneken, B., & Schaefer-Prokop, C. (2015). Observer Variability for Classification of Pulmonary Nodules on Low-Dose CT Images and Its Effect on Nodule Management. Radiology, 277(3), 863–871. https://doi.org/10.1148/radiol.2015142700

Wang, C., Li, J., Zhang, Q., Wu, J., Xiao, Y., Song, L., Gong, H., & Li, Y. (2021). The landscape of immune checkpoint inhibitor therapy in advanced lung cancer. BMC Cancer, 21(1), 968. https://doi.org/10.1186/s12885-021-08662-2

Wang, Y., Midthun, D. E., Wampfler, J. A., Deng, B., Stoddard, S. M., Zhang, S., & Yang, P. (2015). Trends in the proportion of patients with lung cancer meeting screening criteria. JAMA: The Journal of the American Medical Association, 313(8), 853–855. https://doi.org/10.1001/jama.2015.413

Wood, D. E., Kazerooni, E. A., Baum, S. L., Eapen, G. A., Ettinger, D. S., Hou, L., Jackman, D. M., Klippenstein, D., Kumar, R., Lackner, R. P., Leard, L. E., Lennes, I. T., Leung, A. N. C., Makani, S. S., Massion, P. P., Mazzone, P., Merritt, R. E., Meyers, B. F., Midthun, D. E., … Hughes, M. (2018). Lung Cancer Screening, Version 3.2018, NCCN Clinical Practice Guidelines in Oncology. Journal of the National Comprehensive Cancer Network: JNCCN, 16(4), 412–441. https://doi.org/10.6004/jnccn.2018.0020

Wu, B., Zhou, Z., Wang, J., & Wang, Y. (2018). Joint learning for pulmonary nodule segmentation, attributes and malignancy prediction. 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), 1109–1113. https://doi.org/10.1109/ISBI.2018.8363765

Wyker, A., & Henderson, W. W. (2022). Solitary Pulmonary Nodule. StatPearls Publishing.

Yoo, H., Lee, S. H., Arru, C. D., Doda Khera, R., Singh, R., Siebert, S., Kim, D., Lee, Y., Park, J. H., Eom, H. J., Digumarthy, S. R., & Kalra, M. K. (2021). AI-based improvement in lung cancer detection on chest radiographs: results of a multi-reader study in NLST dataset. European Radiology, 31(12), 9664–9674. https://doi.org/10.1007/s00330-021-08074-7

Zahnd, W. E., & Eberth, J. M. (2019). Lung Cancer Screening Utilization: A Behavioral Risk Factor Surveillance System Analysis. American Journal of Preventive Medicine, 57(2), 250–255. https://doi.org/10.1016/j.amepre.2019.03.015